Agents as a Service? Navigating a new paradigm before it unfolds.

Exploring the world of agents as a service: what should we expect to see as this industry unfolds?

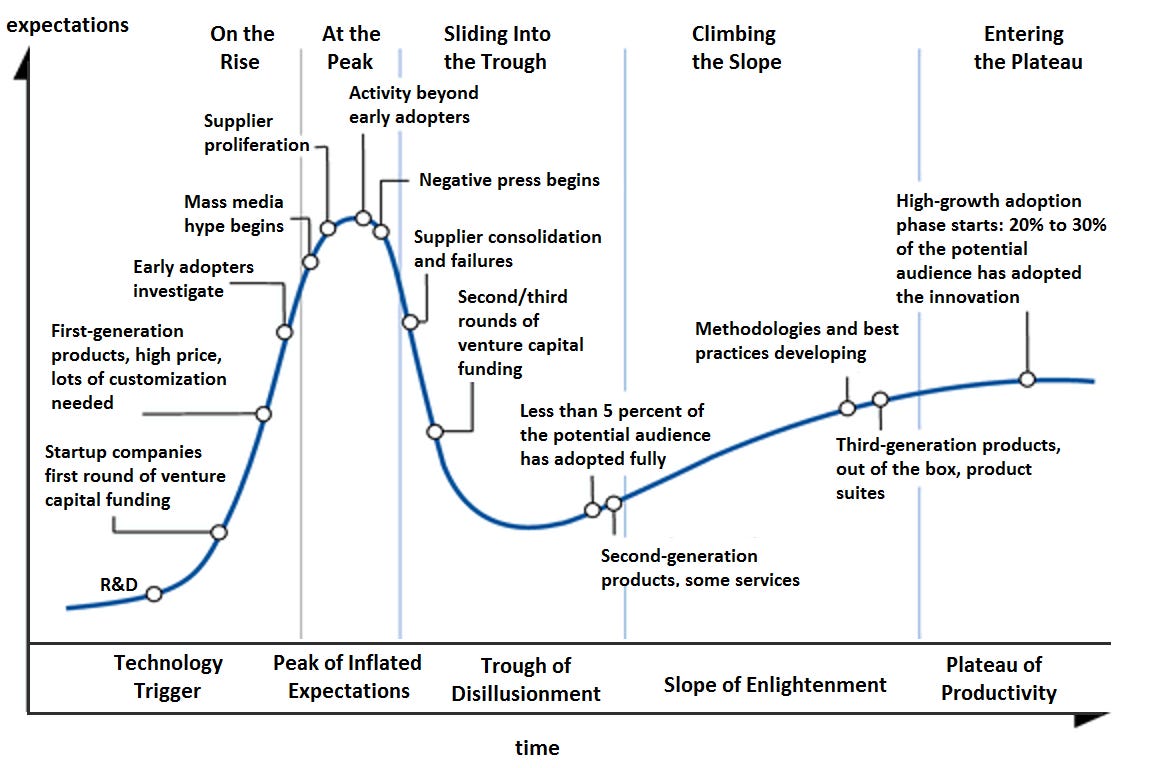

The Gartner Hype Cycle is a visualization and concept developed by the research firm Gartner to represent the development, maturity, and adoption of technologies. It looks like the image above.

If this looks a little complicated, that’s because it is. The Gartner hype cycle has been thoroughly analyzed and elaborated upon, with the goal of providing insight into how technologies mature. However, while the concept can be applied at multiple levels of analysis, the core of the visualization breaks down into five key phases:

Technology Trigger

Peak of Inflated Expectations

Trough of Disillusionment

Slope of Enlightenment

Plateau of Productivity

Almost every major technological advancement passes through these five phases. 3D printing, for example, first emerged in the 1980s but gained mainstream attention in the 2000s with promises of revolutionized manufacturing. By the early 2010s, 3D printing was hyped as a hugely disruptive technology that would allow consumers to print anything from the comfort of their own homes. Shoes, complex electronics, and much more were supposedly going to be entirely within the manufacturing capacity of the average consumer.

So why aren’t we all 3D printing our shoes? Well, as it turned out, this promise of decentralized production was a bit overblown. Complex products such as shoes, silicon chipsets, computers, and phones are simply out of scope for consumer-grade additive manufacturing. Of course, it wasn’t all wrong. 3D printing has gained popularity in consumer markets and given rise to a thriving ecosystem of toys, gadgets, and other products that consumer-grade printers can produce.

This hype cycle plays out time and time again, through various technological advancements and innovations. A new technology is demonstrated to consumers, promises are made and imaginations run wild, until practicality sets in and expectations become more realistic as the technology’s real value becomes clear. The cycle does have its critics. It isn’t particularly “scientific”, in that it doesn’t necessarily rely on empirical data, and “hype” is a largely subjective metric.

In reality, it might be best to think of the Gartner hype cycle as a useful metaphor rather than something on which to base your stock portfolio. Still, it is useful. In November 2022, an alleged research firm called OpenAI released its latest experiment, a language-model-based application called ChatGPT. The hype supernova that ensued is probably the most tangible example of a hype cycle in recent history. We were all there two years ago, hearing announcements of the “next step towards AGI”. Who knew when the mystical singularity would grace us with its presence?

Would the superintelligent robots drive humanity forward into a new utopian age? Or would humanity have to fight for its survival and justify its existence to a resentful robot revolution? The future was hazy with opportunity in the face of mass technological innovation, and its limitless potential seemed to be upon us. The wise among us likely saw through this and bypassed the inflated expectations of the early AI boom. For my part, however, the excitement of it all was intoxicating, and there was a time when I genuinely believed that my job would be entirely automated by some new startup within a year.

There have been attempts, but it’s safe to say I was wrong about that. Going back to the hype cycle, where are we now with “AI”? 2022 wasn’t that long ago, but it has been two years. In that time, we’ve climbed to the peak of inflated expectations, fallen into the trough of disillusionment, and are well on our way up the slope of enlightenment towards the plateau of productivity. In other words, this is the stage of the hype cycle where we can expect to start seeing the real value of this fascinating new technology.

That value comes in many forms, some better than others. We’ve seen AI integrated into applications left and right as companies scramble to capitalize on the latest tech. However, one area of development has captivated my attention more than the others: the attempt to reverse engineer human productivity and anthropomorphize machines. I’m prepared to hazard a guess that on the plateau of productivity, we shall find autonomous agents.

Some background

Broadly speaking, an “agent” is an entity that acts within an environment. You’re an agent, I’m an agent, your cat is an agent. An agent has agency, and acts in accordance with that agency. Given this broad definition, a wide range of applications could probably be called agents. ChatGPT is a recognizable example, along with its vast swath of competitors and derivatives. However, something like ChatGPT might not really be what we mean.

ChatGPT is a passive application: it responds to user queries, but doesn’t really explore its environment on its own initiative. When I hear “agent”, I think of a sort of creature that navigates the completion of its task through reasoning and tool use. I’m talking about the kind of machine we’re all excited about, the type of machine that can do our jobs for us someday.

A robot story

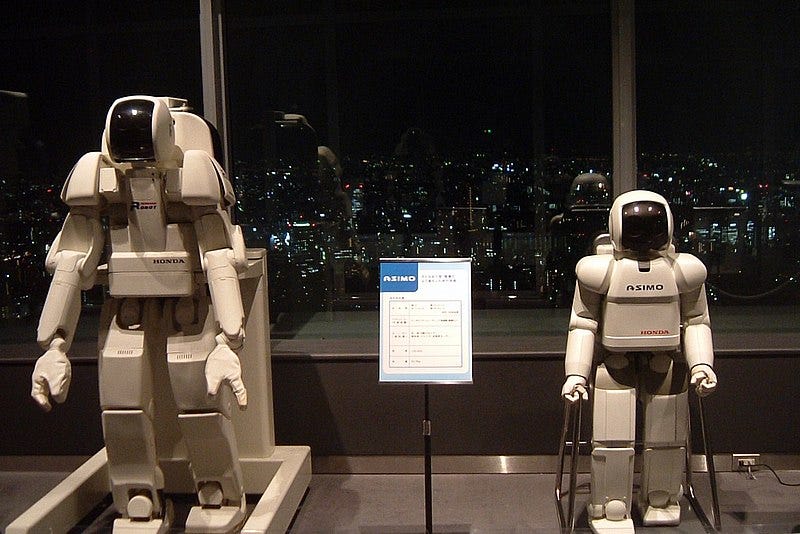

Of course, we’ve seen these kinds of machines in action for a while. I remember being around 12 when I saw a fascinating demo of a humanoid robot, called ASIMO.

This cute little robot came out onto a stage pushing a cart with a water bottle and a cup. ASIMO then proceeded to pick up the water bottle, unscrew the cap, and fill the cup impeccably. Unfortunately, in 2018, Honda announced that all development on ASIMO would cease, with resources redirected to more practical applications of the ASIMO technology.

ASIMO wasn’t the first of its kind. In fact, Honda began developing humanoid robots as far back as the 1980s, and made significant progress during that time.

However, while ASIMO offered a fascinating look at the bleeding edge of its time, it was never much more than an experiment. It was a very successful one, but not the type of creation that directly benefits consumers or organizations. Still, the future presented in those ASIMO demos paints a picture for us: robots going about our mundane tasks, so that we can relax and focus on more important things.

Modern agents

The main advancement in agents over the past decade has been in the performance of automated reasoning. That’s not to say other advancements haven’t been made, of course. However, a long line of iterative development in machine learning has brought us to the point where human-like conversations with machines are commonplace. The technology isn’t new; it’s just finally good enough for people to find it valuable. Modern models are capable of complex reasoning, and although their performance is still questionable in various domains, it’s at least comparable to a human’s.

This human-comparable reasoning has led to an entirely new field of endeavor focused on LLM-based autonomous agents. First on the scene were projects like AutoGPT, BabyAGI, and GPT-Engineer, which emerged as prominent examples of what a language-model-based agent could be. AutoGPT in particular was one I initially found quite fascinating, as it could not only sustain a conversation but also use various tools to complete tasks from my command line.

More recently, several projects, such as Microsoft’s AutoGen and the CrewAI framework, aim to ease the production of agents and make their development more practical. I’ll even shamelessly plug my own project, Simple Agent, a template for building advanced autonomous agents without starting from scratch.

Build Powerful Autonomous Agents Simply - Introduction to Simple Agent

(Author’s Note: this video was initially published to YouTube here.)

However, work on autonomous agents is not confined to GitHub projects. In fact, the corporate world sees potential here as well.

Agents as a Service

SaaS-y

Let’s talk about Software as a Service. Before 2011, if you wanted to use Adobe’s Illustrator or Photoshop, you would buy a license. That license granted you legal access to the version of the software you purchased, in perpetuity, and you could install it on a set number of devices. If you wanted to upgrade, you’d purchase the newer version at a reduced price compared to the full product.

This paradigm was common at the time. Microsoft Office used it, as did Autodesk, QuickBooks, McAfee, and Apple’s Final Cut Pro. Software was treated much like any other product: once purchased, it was owned by the buyer, who retained the right to use it. Then things started to change. In 2011, Adobe announced the “Creative Cloud”, which let users subscribe to its products instead of purchasing them.

A subscription entitled you to access products like Photoshop and Illustrator, so long as you paid regular installments of an agreed-upon price. In 2013, Adobe announced that it would stop selling perpetual licenses, completing its shift towards a model we now call “Software as a Service”, or “SaaS”, pronounced “sass”. This is by far the most common model for paid software distribution, and for good reason.

Besides the strange nature of software itself, which is closer to intellectual property than to a physical product, SaaS makes things simpler. If you want to use an app, just sign up, connect your billing information, and get to work. You can expect on-demand access to the software, regular patches and bug fixes, and updates without any additional payment. So, that’s how we got SaaS.

Subscription-based Agents

So, if Software as a Service is subscription-based access to software, then Agents as a Service would be subscription-based access to agents. I fully expect this paradigm to gain significant prominence in the industry in the coming years, and in some ways it’s already here. In early September 2024, Salesforce announced its new product, Agentforce.

“Humans with agents drive customer success together. Build and customize autonomous AI agents to support your employees and customers 24/7.”

- Agentforce

Agentforce allows users to create custom agents. Autonomous agents. This isn’t another Copilot either (although Salesforce has one of those too), but rather a system for creating independently useful agents that don’t require human supervision to complete tasks and add value to your company. Simply give the agent a description of its role, connect it to a data source, allow it to perform certain actions, define its guidelines, and voilà, you have an agent.
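Here is a minimal sketch of what those four ingredients (role, data source, allowed actions, guidelines) might look like in code. This is not Agentforce’s API or data model. The `AgentSpec` class, its fields, and the example values are hypothetical. They are chosen only to mirror the list in the paragraph above.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSpec:
    """Hypothetical bundle of the settings an agent builder asks for."""
    role: str                # what the agent is for
    data_source: str         # name of the connected data source
    allowed_actions: list[str] = field(default_factory=list)
    guidelines: list[str] = field(default_factory=list)

    def permits(self, action: str) -> bool:
        """An action is only allowed if it was explicitly granted."""
        return action in self.allowed_actions

    def to_system_prompt(self) -> str:
        """Render the spec as instructions for the underlying language model."""
        lines = [
            f"Role: {self.role}",
            f"Data source: {self.data_source}",
            "Allowed actions: " + ", ".join(self.allowed_actions),
            "Guidelines:",
        ]
        lines += [f"- {rule}" for rule in self.guidelines]
        return "\n".join(lines)


spec = AgentSpec(
    role="Answer customer questions about restaurant reservations",
    data_source="reservations_db",  # hypothetical data source name
    allowed_actions=["change_reservation", "redeem_loyalty_points"],
    guidelines=["Escalate anything involving refunds to a human."],
)
print(spec.to_system_prompt())
```

The design choice worth noting is `permits`. Actions are denied unless explicitly granted, which is one simple way to keep an autonomous agent inside its intended job.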

Salesforce isn’t the only company doing this, either. Oracle, Google, Zapier, Second, IBM, and others all have their own versions of an agent builder, which look much the same as Agentforce. In other words, Agents as a Service is not just the paradigm of the future; it’s already here. Companies aren’t just making agent builders, they’re using agents as well.

According to Salesforce, OpenTable managed to boost their customer service KPIs using Agentforce instead of their previous solution. The company, which creates restaurant software, piloted Agentforce on tasks such as reservation changes and loyalty point redemptions. Apparently–and we’re just going off of marketing material here–this helped OpenTable a lot. According to George Pokorny, Senior VP of Global Customer Success at OpenTable:

“Agentforce will handle simple inquiries automatically, so our agents can focus on delivering superior service. We quickly understand the reason for contact and know how best to assist.”

The big problem

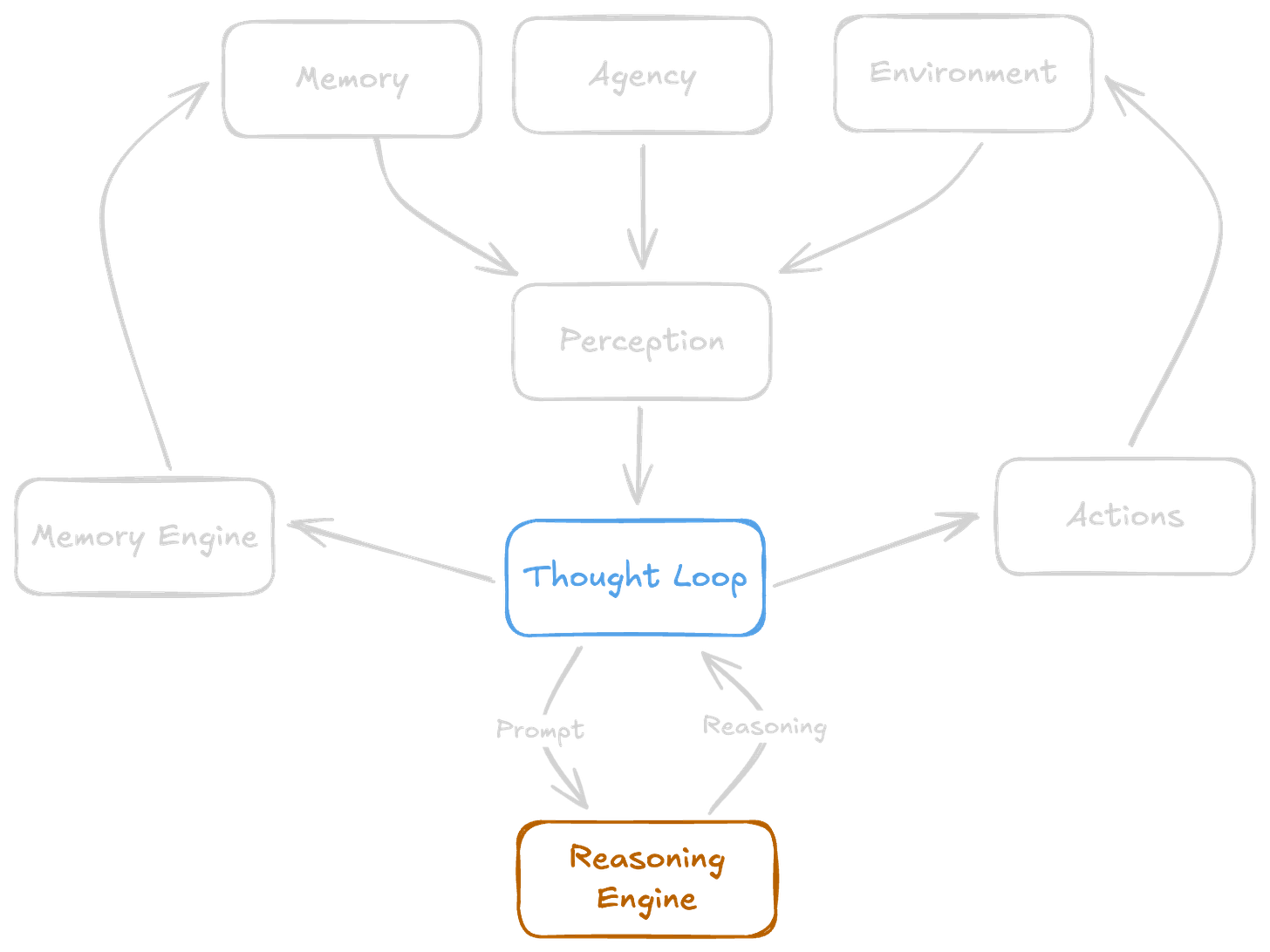

Unfortunately, things aren’t so simple. Besides the unfortunate pronunciation of its acronym, Agents as a Service has a big problem: reliability. For reference, the anatomy of an autonomous agent generally looks something like the diagram above.

This is a generalization, but the visualization above demonstrates the agent loop. The loop begins with an input, some kind of perception or prompt, which is repeatedly sent to a reasoning engine that decides which actions to take. The actions taken, along with their results, are piped back into the reasoning engine’s perception, and the cycle repeats.
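The loop just described can be sketched in a few lines. This is a minimal sketch under stated assumptions:

- The "reasoning engine" is any Python function rather than a real language model.
- The `multiply` tool and `scripted_engine` are hypothetical stand-ins, so the example runs offline.
- The `max_steps` cap is an addition, since an unbounded loop around an unreliable engine is a risk in itself.

```python
from typing import Callable

# The reasoning engine maps everything perceived so far to (action, argument).
# In a real agent this would be a language model call; here it is any function.
ReasoningEngine = Callable[[list[str]], tuple[str, str]]
Tool = Callable[[str], str]


def run_agent(task: str, reason: ReasoningEngine,
              tools: dict[str, Tool], max_steps: int = 10) -> str:
    """Perceive -> reason -> act, feeding each result back in, until 'finish'."""
    perceptions = [f"TASK: {task}"]              # initial input / prompt
    for _ in range(max_steps):
        action, argument = reason(perceptions)   # decide the next action
        if action == "finish":
            return argument                      # the agent's final answer
        result = tools[action](argument)         # act in the environment
        # Pipe the action and its result back into the engine's perception.
        perceptions.append(f"ACTION: {action}({argument}) -> {result}")
    return "gave up after max_steps"


def multiply(argument: str) -> str:
    """Hypothetical tool: multiply two comma-separated integers."""
    a, b = (int(part) for part in argument.split(","))
    return str(a * b)


def scripted_engine(perceptions: list[str]) -> tuple[str, str]:
    """Stand-in for a model: call the tool once, then report its result."""
    if len(perceptions) == 1:
        return ("multiply", "6,7")
    return ("finish", perceptions[-1].split("-> ")[-1])


answer = run_agent("What is 6 times 7?", scripted_engine, {"multiply": multiply})
print(answer)  # 42
```

Swapping `scripted_engine` for a function that calls a language model turns this into the real thing. That is exactly where the unreliability discussed next enters the loop.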

For any machine, however, core components act as both the mechanisms of and the bottlenecks on performance. In our case, we can build robust tools and build agentic systems around the very best models on the market, but the reasoning engine will always be a bottleneck on agent performance, and reasoning engines have performance issues. Language models are the bottleneck.
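One way to see why the reasoning engine dominates reliability: an agent consults its engine at every step of the loop, so small per-step error rates compound. The sketch below makes a strong assumption. Each step succeeds independently with the same probability p, so an n-step task succeeds with probability p^n. Real failures are rarely independent, so treat this as a back-of-the-envelope model. Under that assumption, a step that is right 95% of the time gives only about a 60% chance of completing a ten-step task.

```python
def task_success_probability(step_success: float, steps: int) -> float:
    """Probability that every step succeeds, ASSUMING steps fail independently."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be a probability")
    return step_success ** steps


# Tabulate how quickly per-step reliability erodes over longer tasks.
for p in (0.90, 0.95, 0.99):
    for n in (5, 10, 20):
        print(f"p={p:.2f}, steps={n:2d}: {task_success_probability(p, n):.3f}")
```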

A recent study [1] introduced a new benchmark called SimpleBench, which evaluates language models on 200 specially designed text-based reasoning tasks. The benchmark consists of multiple-choice questions focused on areas like spatial reasoning, social intelligence, and linguistic adversarial robustness (trick questions). On this benchmark, which you can find here, the average human score is 83.7%, which serves as a baseline for comparison with models.

The top-performing model on SimpleBench is OpenAI’s latest model, o1-preview, which received a record score of… 41.7%. So yeah, that’s approximately half the performance of the average human on this specific benchmark. Anthropic’s Claude 3.5 Sonnet (10-22) is a close second at the time of writing, with a score of 41.4%. From there, every other model scores below 30% on SimpleBench. That’s a serious problem with reasoning.
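For reference, here is the arithmetic behind “approximately half”, taking each model’s score as a fraction of the 83.7% human baseline quoted above:

```latex
\frac{41.7\%}{83.7\%} \approx 0.498 \quad \text{(o1-preview)}, \qquad
\frac{41.4\%}{83.7\%} \approx 0.495 \quad \text{(Claude 3.5 Sonnet, 10-22)}
```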

SimpleBench, of all benchmarks, is an important indicator of the bottleneck in language model performance because it shows a significant discrepancy between human and model performance. Language models perform well on the most popular benchmarks at this point: the leading models all score around 90% on MMLU and HumanEval, and around 75% on math benchmarks. SimpleBench, however, relies on a combination of logic problems and trick questions, and so exploits a central weakness of language models: common sense.

Language models are not human: they’re not designed like humans and they don’t work like humans. A language model is, at bottom, a learned approximation of human language produced by a universal function approximator. So the text a language model generates will likely look right, even when it isn’t. As a result, language models reason quite well on in-distribution tasks, which their training has prepared them for. On out-of-distribution tasks and prompts, however, a model will struggle to generalize, and the space of out-of-distribution inputs is far larger than the in-distribution one.
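Here is a toy analogy for the in-distribution versus out-of-distribution point, with the caveat that a straight line is nothing like a language model. We fit a line by ordinary least squares to samples of y = x^2 taken only from [0, 1], then query it inside and outside that range. The target function, the sample grid, and the query point x = 10 are arbitrary choices for illustration. On the training range the worst error is about 0.15. At x = 10 the line predicts roughly 9.85 where the truth is 100.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def target(x: float) -> float:
    """The 'true' function the model only ever sees through samples."""
    return x ** 2


# "Training distribution": eleven evenly spaced points on [0, 1].
train_x = [i / 10 for i in range(11)]
slope, intercept = fit_line(train_x, [target(x) for x in train_x])


def model(x: float) -> float:
    return slope * x + intercept


in_dist_error = max(abs(model(x) - target(x)) for x in train_x)
out_dist_error = abs(model(10.0) - target(10.0))
print(f"worst error on [0, 1]: {in_dist_error:.2f}")
print(f"error at x = 10:       {out_dist_error:.2f}")
```

The fit looks right wherever it was trained and is badly wrong where it wasn’t, and nothing in the model itself signals which case you are in.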

The needle will likely continue to move towards higher performance as models are optimized and more compute is dedicated to training. In the meantime, however, agents will retain sub-human performance on a wide range of tasks. This raises the question: would you trust a language model to be responsible for a value center of your business?

For some, the answer will be yes. Customer service has already been largely automated, and given the relatively straightforward nature of the tasks, it’s possible that this domain will continue to be automated by language-model-based Agent-as-a-Service companies. However, for more complex tasks like software development, things won’t be so simple.

(Author’s Note: just to avoid any confusion, SimpleBench and Simple Agent are not affiliated. Even though the word ‘Simple’ does mean the same thing in both contexts.)

Differentiation

While it may be a while before value centers can be handed entirely to autonomous agents, we could still see a serious amount of value from this industry. First of all, agents are fun, and they can do genuinely valuable work. In creative fields, I’d say agents function best when generating supplemental materials for humans to use. For example, while researching this article, I used a combination of Perplexity and Simple Agent to generate fact sheets and reports.

This kind of supplemental application is likely where agent integrations will deliver real value. For those interested in selling their own agent services, it is also likely where a business can differentiate itself. The classic business advice applies here: find a niche, ensure founder-market fit, make something people want, and so on. However, remember the non-negotiable constraints of language models and their derivative products.

This is the difference between building something like an entirely autonomous software engineer (sorry, Devin) and building a tool like Second.dev, which handles code migrations. The difference lies in the problem set your tool is designed to solve. Too wide a problem set makes providing a solution a near-impossible task, especially given the unreliability of language models. So, for those of us interested in this emerging industry, the future is bright, but exercise restraint in how you scope your solutions.
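One way to express the advice about narrow problem sets in code is a router. It lets the agent handle only a small, explicitly listed set of requests and hands everything else to a person. This is a minimal sketch, not how Second.dev or Agentforce work. The intent names (modeled on the reservation changes and loyalty redemptions from the OpenTable pilot described earlier), the upstream confidence score, and the 0.8 threshold are all assumptions for illustration.

```python
# Hypothetical set of narrow, well-understood intents the agent may handle.
IN_SCOPE_INTENTS = frozenset({"change_reservation", "redeem_loyalty_points"})


def route(intent: str, confidence: float, threshold: float = 0.8) -> str:
    """Send a request to the agent only if it is in scope and confidently classified.

    `intent` and `confidence` are ASSUMED to come from some upstream classifier;
    anything outside the narrow problem set falls back to a human.
    """
    if intent in IN_SCOPE_INTENTS and confidence >= threshold:
        return "agent"
    return "human"


for request in [("change_reservation", 0.95),
                ("change_reservation", 0.55),
                ("negotiate_catering_contract", 0.99)]:
    print(request, "->", route(*request))
```

Widening `IN_SCOPE_INTENTS` is a deliberate decision rather than a default. That mirrors the point above about keeping the problem set small enough for an unreliable reasoning engine.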

Author’s Note

Thank you so much for reading. I’ll cut to the chase: I want JARVIS so bad. Honestly, my big goal in life right now is to create a JARVIS-like assistant that can help me in all areas of my life and hold me accountable. This is going to be tricky, but I’m optimistic, and for now I’m focusing on laying the groundwork.

However, I figure other people might be interested in this kind of thing too, which is why I started researching Agents as a Service as a paradigm. It’s in its infancy, but it’s certainly here, and will only grow. I, for one, am excited to see that play out.

With that said, as always, thank you again for reading, and I’ll see you next time. Goodbye.

Further Reading

Since we’re on the topic, here are some other articles which you might be interested in.

How to Successfully Integrate Language Models into Your Application


What Makes a Mind? Part 1: Exploring Intelligence through Juxtaposition of Man and Machine.


How Software Development will become Automated.


Credits

Thumbnail:

Bilal Azhar at https://substack.com/@intelligenceimaginarium

Music: Track - Feeling Good by Pufino, Source - https://freetouse.com/music, Free Music No Copyright (Safe)

References and Citations

[1] Philip and Hemang. (2024). SimpleBench: The Text Benchmark in which Unspecialized Human Performance Exceeds that of Current Frontier Models.